We'll show you a simple way to set up NGINX Proxy Manager so that your local Ollama installation is available on your local network.

Step 1: Install Ollama for Windows

This guide assumes you are using the Windows version of Ollama; all you have to do is download and run the installer. Once installed and started, Ollama listens only on http://127.0.0.1:11434. So if your computer's address on the local network is, for example, 192.168.31.146, other devices cannot reach Ollama at 192.168.31.146:11434. We will fix this by installing a proxy manager that routes requests arriving at the network address to Ollama.
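To confirm the problem before changing anything, you can test whether the port accepts TCP connections. The following Python sketch uses only the standard library; 192.168.31.146 is the example address from above and should be replaced with your own. With a default installation you would expect 127.0.0.1 to be reachable and the LAN address not to be.

```python
import socket


def is_port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        # create_connection resolves the host and attempts a TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


if __name__ == "__main__":
    # Assumption: 192.168.31.146 is this computer's LAN address, as in the example text.
    for host in ("127.0.0.1", "192.168.31.146"):
        state = "reachable" if is_port_open(host, 11434) else "not reachable"
        print(f"{host}:11434 is {state}")
```

You can run it on the Ollama machine itself or on another device in the network; rerun it after finishing the setup to check the result.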

Step 2: Install NGINX Proxy Manager

Start Docker Desktop and create a new file c:/nginx/docker-compose.yml:

```yaml
version: '3'
services:
  app:
    image: 'jc21/nginx-proxy-manager:latest'
    container_name: nginx-proxy-manager
    ports:
      - '80:80'
      - '81:81'
      - '443:443'
```

Now open a terminal in the c:/nginx folder and start the NGINX Proxy Manager container:

```
docker compose up
```

After installation, Proxy Manager is available at http://localhost:81/

Log in using the default credentials:

Email: admin@example.com

Password: changeme

After you log in, the program prompts you to change these credentials.

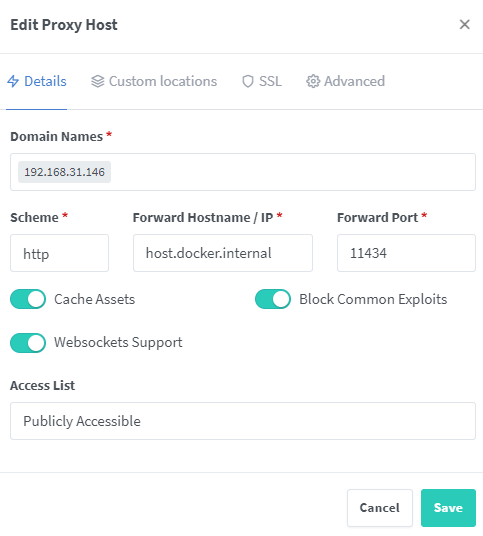

Step 3: Set up a proxy host for Ollama

- Click the "Proxy Hosts" menu.

- Click the "Add Proxy Host" button.

- Domain Names: 192.168.31.146 (replace this with the network address of the computer on which Ollama is running!)

- Forward Hostname / IP: host.docker.internal

- Forward Port: 11434

- Enable Cache Assets and Websockets Support, then click Save.

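We use host.docker.internal as the forward hostname because NGINX runs inside a Docker container with its own network. Inside the container, 127.0.0.1 refers to the container itself, not to the Windows host where Ollama is listening; on Docker Desktop, host.docker.internal resolves to the host machine.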

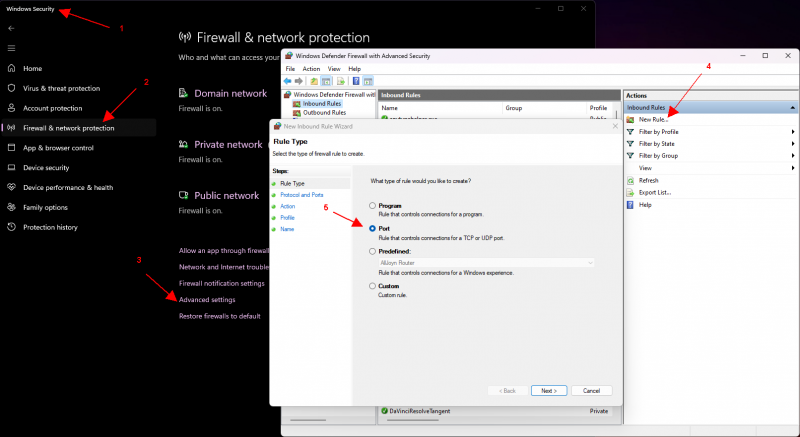

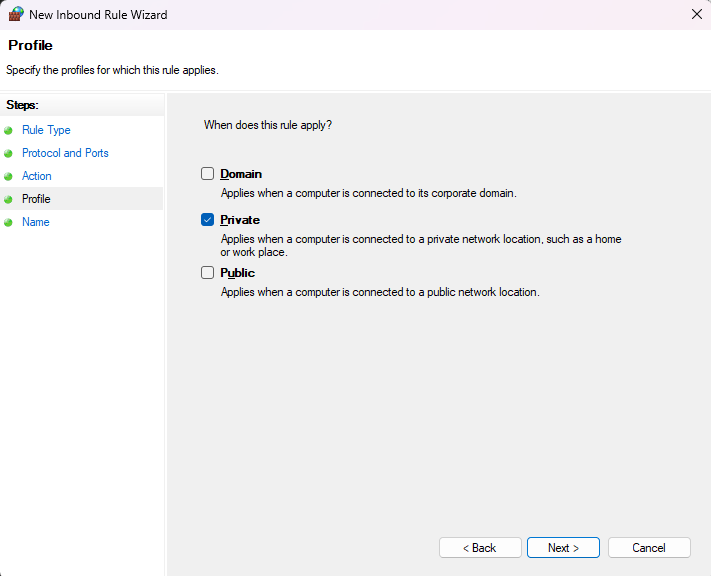

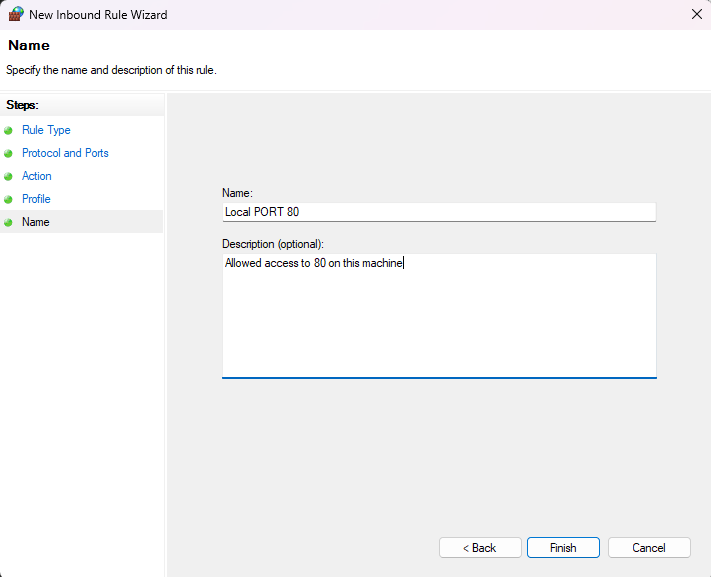
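To make the role of the proxy host more concrete, here is a minimal Python sketch of what a reverse proxy does conceptually: it accepts a request on one address and re-sends it to another. It is an illustration only, not how NGINX Proxy Manager is implemented; the listening port 8080 and the names make_proxy_handler and run are hypothetical choices. Because this sketch runs directly on Windows rather than in a container, forwarding to 127.0.0.1 works here; inside the NGINX container the same target has to be written as host.docker.internal.

```python
import http.server
import urllib.error
import urllib.request


def make_proxy_handler(upstream: str):
    """Build a request handler class that forwards every request to `upstream`."""

    class ProxyHandler(http.server.BaseHTTPRequestHandler):
        def _forward(self) -> None:
            # Read the request body (if any) so it can be passed on unchanged.
            length = int(self.headers.get("Content-Length", 0))
            body = self.rfile.read(length) if length else None
            request = urllib.request.Request(upstream + self.path, data=body, method=self.command)
            if self.headers.get("Content-Type"):
                request.add_header("Content-Type", self.headers["Content-Type"])
            try:
                with urllib.request.urlopen(request) as response:
                    status, headers, payload = response.status, response.headers, response.read()
            except urllib.error.HTTPError as err:
                # Pass upstream error responses (e.g. 404) through to the client.
                status, headers, payload = err.code, err.headers, err.read()
            except urllib.error.URLError:
                # Upstream not reachable at all (e.g. Ollama is not running).
                status, headers, payload = 502, {"Content-Type": "text/plain"}, b"Bad Gateway"
            # The whole upstream response is buffered before it is sent back.
            self.send_response(status)
            self.send_header("Content-Type", headers.get("Content-Type", "application/octet-stream"))
            self.send_header("Content-Length", str(len(payload)))
            self.end_headers()
            self.wfile.write(payload)

        do_GET = _forward
        do_POST = _forward
        do_DELETE = _forward

    return ProxyHandler


def run(listen_port: int = 8080, upstream: str = "http://127.0.0.1:11434") -> None:
    """Listen on all interfaces and forward to Ollama on this machine (blocks forever)."""
    server = http.server.ThreadingHTTPServer(("0.0.0.0", listen_port), make_proxy_handler(upstream))
    server.serve_forever()
```

Calling run() and then opening http://192.168.31.146:8080/ from another device should return Ollama's "Ollama is running" status text, provided port 8080 is allowed through the firewall. Unlike NGINX, this sketch buffers each response completely, so Ollama's streamed answers arrive all at once.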

Step 4: Configure Windows Firewall

Next, we must allow other devices on the network to reach the computer. Create an inbound rule in Windows Defender Firewall that allows TCP port 80, the port the proxy listens on. If you use a different firewall or antivirus, open port 80 there in a similar way.
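If you prefer the command line to the firewall settings window, Windows' built-in netsh tool can create the same inbound rule. The Python sketch below only assembles and runs that command; the function names and the rule name are hypothetical examples, and running it requires an administrator (elevated) prompt.

```python
import subprocess
import sys


def firewall_rule_command(port: int, rule_name: str) -> list[str]:
    """Build a netsh command that allows inbound TCP traffic on `port`."""
    return [
        "netsh", "advfirewall", "firewall", "add", "rule",
        f"name={rule_name}",   # hypothetical name; avoid spaces to keep quoting simple
        "dir=in",              # inbound connections from other devices
        "action=allow",
        "protocol=TCP",
        f"localport={port}",
    ]


def add_firewall_rule(port: int, rule_name: str) -> None:
    """Run the netsh command; requires Windows and administrator rights."""
    if sys.platform != "win32":
        raise OSError("netsh is only available on Windows")
    subprocess.run(firewall_rule_command(port, rule_name), check=True)
```

For this setup, add_firewall_rule(80, "NGINX-Proxy-Manager-HTTP") would open the proxy's HTTP port.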

Done!

Ollama is now available at your computer's address on the local network (for example, http://192.168.31.146). What you do next is up to you: you can use the Ollama API from chat applications or from applications you develop yourself.
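As an example of using the API from your own code, the following Python sketch sends a prompt to Ollama's /api/generate endpoint through the proxy (port 80, so the URL has no port number). It assumes the example address 192.168.31.146 and a model named llama3 that you have already pulled; substitute your own. Setting "stream" to false asks Ollama to return a single JSON object whose "response" field holds the generated text.

```python
import json
import urllib.request


def build_generate_request(base_url: str, model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint (non-streaming)."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        base_url.rstrip("/") + "/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(base_url: str, model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt and return the generated text from the 'response' field."""
    request = build_generate_request(base_url, model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

For example, generate("http://192.168.31.146", "llama3", "Why is the sky blue?") returns the model's answer as a string.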

A simpler alternative

That was the more complicated method; now for a much easier one. Instead of launching Ollama from its shortcut, quit the Ollama app if it is running and start Ollama from the Windows Command Prompt (cmd.exe):

```
set OLLAMA_HOST=0.0.0.0
set OLLAMA_ORIGINS=*
ollama serve
```

You will probably also need to allow TCP port 11434 for incoming connections in Windows Firewall. Ollama will then be accessible to every device on your local network at port 11434, for example http://192.168.31.146:11434.
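The same environment variables can also be set from a script. This Python sketch (function names are hypothetical) starts ollama serve with OLLAMA_HOST and OLLAMA_ORIGINS set only for that child process, much as set does for the current Command Prompt window. It assumes the ollama executable is on your PATH and that the Ollama tray app has been quit first.

```python
import os
import subprocess


def ollama_env(base=None) -> dict:
    """Return a copy of the environment with Ollama configured for network access."""
    env = dict(os.environ if base is None else base)
    env["OLLAMA_HOST"] = "0.0.0.0"  # listen on every network interface, not just 127.0.0.1
    env["OLLAMA_ORIGINS"] = "*"     # allow requests from any origin (browser CORS check)
    return env


def start_ollama() -> subprocess.Popen:
    """Start `ollama serve` with the variables above; assumes `ollama` is on PATH."""
    return subprocess.Popen(["ollama", "serve"], env=ollama_env())
```

Because the variables are passed only to the child process, your system-wide environment stays unchanged.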